This week, world leaders and tech experts are gathering at the historic Bletchley Park to discuss the opportunities and risks of artificial intelligence at the first-ever Global AI Safety Summit. While AI innovation presents immense potential, concerns remain about the possible misuse and unintended consequences of rapidly advancing AI systems, including the risk that they could aid the development of chemical or biological weapons.

Rather than producing formal regulations, the summit focuses on building consensus around responsible AI development. The discussions centre on Frontier AI: highly capable systems that can perform a wide range of tasks. Examples include large language models (LLMs) such as the one behind ChatGPT, which can generate remarkably human-like text.

Here, we provide answers to some of the common questions about this noteworthy summit:

Key Topics of Discussion:

The AI safety summit focuses primarily on Frontier AI systems, characterised as highly capable models that can match or exceed the abilities of the most advanced AI currently available. These include the LLMs behind chatbots such as ChatGPT and Google’s Bard. However, the central concern is future AI models set for release in the coming years, and how they can be rigorously tested and monitored to prevent harm.

Who Will Be in Attendance?

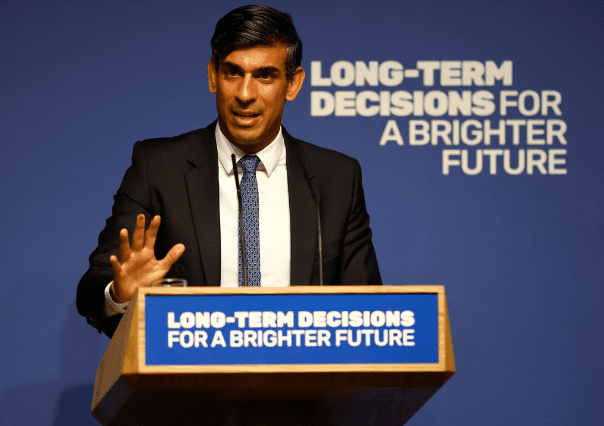

The summit will feature prominent figures, including UK Prime Minister Rishi Sunak and UK Technology Secretary Michelle Donelan. International dignitaries include U.S. Vice President Kamala Harris, European Commission President Ursula von der Leyen, and Italian Prime Minister Giorgia Meloni. Notably, some world leaders, including U.S. President Joe Biden, French President Emmanuel Macron, and Canadian Prime Minister Justin Trudeau, have opted not to participate.

The UK government is content with the diverse range of attendees from governments, industry, and civil society. The presence of Chinese government officials remains uncertain, despite public efforts to encourage their participation.

Representatives from the tech industry, including Google’s AI unit Google DeepMind, OpenAI (creator of ChatGPT), and Meta (represented by Nick Clegg, its president of global affairs), will also take part. Eminent AI experts Geoffrey Hinton and Yoshua Bengio, often referred to as the “godfathers” of modern AI, have voiced concern about the pace of AI development and its associated risks, which they liken to threats on the scale of pandemics and nuclear war.

What’s on the Agenda?

The first day of the summit will address a range of AI-related risks, from national security threats to existential concerns about AI systems evading human control. In response to concerns that long-term threats could overshadow nearer-term issues, discussions will also cover present-day problems such as AI-generated deepfakes, election disruption, the erosion of social trust, and global inequalities. The positive aspects of AI, particularly its potential in education, will also be examined.

U.S. Vice President Kamala Harris will deliver a speech outlining the Biden administration’s approach to AI. The speech is not seen as a distraction from the summit’s goals; Harris and Sunak are also scheduled to meet for dinner. The White House has recently outlined its regulatory stance on AI, which includes a requirement for companies to share safety test results with the U.S. government before releasing AI models to the public.

On the second day, Rishi Sunak will lead a smaller assembly of foreign governments, companies, and experts to discuss concrete actions to address AI safety risks. He has proposed the establishment of an AI equivalent to the Intergovernmental Panel on Climate Change, producing annual reports on AI technology and associated risks.

Expected Outcomes:

While the summit will not establish a formal regulatory body for AI, Rishi Sunak aims to foster consensus on the risks associated with unrestricted AI development and the best approaches to mitigate these risks. Officials are working to finalise an official statement that highlights the nature of AI risks, particularly the potential for “catastrophic harm.”

It is expected that one or more AI developers may commit to slowing their development of Frontier AI. Having the major AI companies at the same forum is anticipated to create collective pressure and encourage coordinated action.

Sunak envisions this summit as the inaugural event in a series of regular international AI gatherings, following the template of G7, G20, and COP conferences. Overall, the summit represents an important step toward collective oversight of rapidly evolving AI.